Computer science,Scalar processor,Flynn's taxonomy,vector processor,superscalar processor,Parallel computing,Memory hierarchy,Virtual memory,Shared memory,advanced architecture and parallel processing

Computer science

Computer science is the scientific and practical approach to computation and its applications. It is the systematic study of the feasibility, structure, expression, and mechanization of the methodical procedures (or algorithms) that underlie the acquisition, representation, processing, storage, communication of, and access to information. An alternate, more succinct definition of computer science is the study of automating algorithmic processes that scale. A computer scientist specializes in the theory of computation and the design of computational systems.

Scalar processor

Scalar processors represent a class of computer processors. A scalar processor processes only one datum at a time, with typical data items being integers or floating-point numbers. A scalar processor is classified as a SISD processor (Single Instruction, Single Data) in Flynn's taxonomy.

Flynn's taxonomy

Flynn's taxonomy is a classification of computer architectures, proposed by Michael J. Flynn in 1966. The classification system has stuck, and it has been used as a tool in the design of modern processors and their functionalities. Since the rise of multiprocessing central processing units (CPUs), a multiprogramming context has evolved as an extension of the classification system.
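As a rough illustration, the taxonomy can be read as a two-way split on how many instruction streams and how many data streams a machine handles at once. The sketch below assumes that reading; the function name `flynn_class` is hypothetical and chosen only for this example.

```python
def flynn_class(instruction_streams: int, data_streams: int) -> str:
    """Return the Flynn class name for the given numbers of concurrent streams.

    Hypothetical helper: "S" stands for single (exactly one stream),
    "M" for multiple (more than one stream).
    """
    if instruction_streams < 1 or data_streams < 1:
        raise ValueError("stream counts must be at least 1")
    instruction_part = "S" if instruction_streams == 1 else "M"
    data_part = "S" if data_streams == 1 else "M"
    return f"{instruction_part}I{data_part}D"


print(flynn_class(1, 1))  # SISD, e.g. a scalar processor
print(flynn_class(1, 8))  # SIMD, e.g. a vector processor
print(flynn_class(4, 4))  # MIMD, e.g. a multi-core processor
```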

Vector processor

In a vector processor, a single instruction operates simultaneously on multiple data items (referred to as "SIMD"). The difference between a scalar and a vector processor is analogous to the difference between scalar and vector arithmetic.
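The contrast between scalar and vector operation can be sketched by counting modelled instruction steps. This is only a software model under stated assumptions: the lane count of 4 and the function names are made up for illustration, and plain Python does not actually execute the lanes simultaneously.

```python
def scalar_add(a, b):
    """Model a scalar processor: one element pair is added per step."""
    result, steps = [], 0
    for x, y in zip(a, b):
        result.append(x + y)
        steps += 1
    return result, steps


def vector_add(a, b, lanes=4):
    """Model a vector processor: one step adds up to `lanes` element pairs.

    `lanes` is an assumed hardware vector width, not a value from the text.
    """
    result, steps = [], 0
    for start in range(0, len(a), lanes):
        chunk_a = a[start:start + lanes]
        chunk_b = b[start:start + lanes]
        result.extend(x + y for x, y in zip(chunk_a, chunk_b))
        steps += 1
    return result, steps


a = [1, 2, 3, 4, 5, 6, 7, 8]
b = [10] * 8
print(scalar_add(a, b)[1])  # 8 modelled steps
print(vector_add(a, b)[1])  # 2 modelled steps
```

Both functions return the same sums; only the number of modelled steps differs.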

Superscalar processor

A superscalar processor is a CPU that implements a form of parallelism called instruction-level parallelism within a single processor. It executes more than one instruction during a clock cycle by simultaneously dispatching multiple instructions to redundant execution units on the processor, which allows more throughput (the number of instructions that can be executed in a unit of time) than would otherwise be possible at a given clock rate. Each execution unit is not a separate processor (or a core, if the processor is multi-core) but an execution resource within a single CPU, such as an arithmetic logic unit, a bit shifter, or a multiplier.
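To make the throughput claim concrete, here is a deliberately simplified toy model of in-order issue. It assumes every instruction takes one cycle and that an instruction cannot issue in the same cycle as an earlier instruction whose result it reads (or whose destination it overwrites). The instruction format and the name `cycles_needed` are hypothetical; real superscalar pipelines are far more involved.

```python
def cycles_needed(program, width):
    """Count cycles to issue `program` in order, at most `width` per cycle.

    Each instruction is a (destination, sources) pair. Issue within a cycle
    stops at the first instruction that depends on a result produced
    earlier in the same cycle.
    """
    cycles = 0
    index = 0
    while index < len(program):
        written_this_cycle = set()
        issued = 0
        while index < len(program) and issued < width:
            dest, sources = program[index]
            if written_this_cycle & set(sources) or dest in written_this_cycle:
                break  # dependency: wait for the next cycle
            written_this_cycle.add(dest)
            issued += 1
            index += 1
        cycles += 1
    return cycles


program = [
    ("a", ("x", "y")),  # a = x op y
    ("b", ("x", "z")),  # b = x op z (independent of a)
    ("c", ("a", "b")),  # c = a op b (needs both earlier results)
    ("d", ("y", "z")),  # d = y op z (independent of c)
]
print(cycles_needed(program, width=1))  # 4
print(cycles_needed(program, width=2))  # 2
```

A fully dependent chain gains nothing from the wider issue width in this model, which is why the available instruction-level parallelism in the program matters as much as the number of execution units.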

Parallel computing

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has been employed for many years, mainly in high-performance computing, but interest in it has grown lately due to the physical constraints preventing frequency scaling. As power consumption (and consequently heat generation) by computers has become a concern in recent years, parallel computing has become the dominant paradigm in computer architecture, mainly in the form of multi-core processors.
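The idea of dividing a large problem into smaller ones that are solved at the same time can be sketched with Python's standard `concurrent.futures` module. This is a minimal sketch of the decomposition pattern only: the helper names are hypothetical, and in standard CPython builds threads may not speed up CPU-bound work, so the example shows the structure rather than a performance gain.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(values, n_chunks):
    """Split `values` into at most `n_chunks` contiguous pieces."""
    size = max(1, -(-len(values) // n_chunks))  # ceiling division
    return [values[i:i + size] for i in range(0, len(values), size)]


def sum_of_squares(chunk):
    """The smaller problem solved independently for each piece."""
    return sum(v * v for v in chunk)


def parallel_sum_of_squares(values, workers=4):
    """Solve the pieces concurrently, then combine the partial results."""
    chunks = chunked(values, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    return sum(partials)


print(parallel_sum_of_squares(list(range(1, 101))))  # 338350
```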

Memory hierarchy

In computer architecture, the memory hierarchy is a concept used when discussing performance issues in computer architectural design, algorithm predictions, and lower-level programming constructs such as those involving locality of reference. The memory hierarchy in computer storage distinguishes each level in the hierarchy by response time. Since response time, complexity, and capacity are related, the levels may also be distinguished by their performance and controlling technologies.
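One common way to reason about response time across levels is the average memory access time: the hit time of a level plus its miss rate multiplied by the cost of going to the next level. The sketch below applies that formula from the slowest level upward. The function name and all timing numbers are illustrative assumptions, not measurements.

```python
def average_access_time(cache_levels, memory_time):
    """Average access time for a hierarchy of caches in front of main memory.

    cache_levels: list of (hit_time, miss_rate) pairs, fastest level first.
    memory_time:  access time of main memory, in the same unit as hit_time.
    """
    time = memory_time
    # Work from the level closest to memory back up to the fastest level.
    for hit_time, miss_rate in reversed(cache_levels):
        time = hit_time + miss_rate * time
    return time


# Assumed numbers: L1 hits in 1 cycle and misses 10% of the time,
# L2 hits in 10 cycles and misses 50% of the time, memory takes 100 cycles.
levels = [(1, 0.10), (10, 0.50)]
print(average_access_time(levels, memory_time=100))
```

With these assumed figures, the second level costs 10 + 0.5 × 100 = 60 cycles on average, so the whole hierarchy averages about 1 + 0.1 × 60 = 7 cycles per access.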

Virtual memory

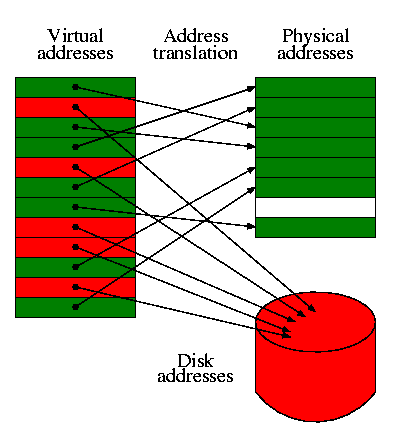

In computing, virtual memory is a memory management technique that is implemented using both hardware and software. It maps memory addresses used by a program, called virtual addresses, into physical addresses in computer memory. Main storage as seen by a process or task appears as a contiguous address space or collection of contiguous segments. The operating system manages virtual address spaces and the assignment of real memory to virtual memory. Address translation hardware in the CPU, often referred to as a memory management unit or MMU, automatically translates virtual addresses to physical addresses. Software within the operating system may extend these capabilities to provide a virtual address space that can exceed the capacity of real memory and thus reference more memory than is physically present in the computer.
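The mapping from virtual to physical addresses can be sketched as a page-table lookup. This is a simplified model, assuming fixed-size 4096-byte pages and a single-level table held in a dictionary; the names `translate` and `PageFault` are hypothetical, and a real MMU performs this step in hardware.

```python
PAGE_SIZE = 4096  # assumed page size in bytes


class PageFault(Exception):
    """Raised when a virtual page has no physical frame assigned."""


def translate(virtual_address, page_table, page_size=PAGE_SIZE):
    """Translate a virtual address to a physical address.

    page_table maps virtual page numbers to physical frame numbers.
    """
    page_number, offset = divmod(virtual_address, page_size)
    frame_number = page_table.get(page_number)
    if frame_number is None:
        # In a real system the operating system would handle this,
        # for example by loading the page from secondary storage.
        raise PageFault(f"virtual page {page_number} is not mapped")
    return frame_number * page_size + offset


page_table = {0: 5, 1: 2}  # virtual page -> physical frame (made-up values)
print(translate(10, page_table))    # 20490 (frame 5, offset 10)
print(translate(4100, page_table))  # 8196  (frame 2, offset 4)
```

Contiguous virtual pages 0 and 1 land in non-adjacent frames 5 and 2, which is how a process can see a contiguous address space while physical memory is assigned piecemeal.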

Shared memory

In computer science, shared memory is memory that may be simultaneously accessed by multiple programs with an intent to provide communication among them or avoid redundant copies. Shared memory is an efficient means of passing data between programs. Depending on context, programs may run on a single processor or on multiple separate processors.
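As a small sketch of passing data through shared memory without copying it through a pipe or socket, the example below uses Python's standard `multiprocessing.shared_memory` module (available since Python 3.8). For brevity both handles live in one process; in practice the second handle would usually be opened by a different program using the block's name. The function name `roundtrip` is hypothetical.

```python
from multiprocessing import shared_memory


def roundtrip(message: bytes) -> bytes:
    """Write `message` into a shared block and read it back via a second handle.

    `message` must be non-empty, because a shared block needs a positive size.
    """
    writer = shared_memory.SharedMemory(create=True, size=len(message))
    try:
        writer.buf[:len(message)] = message
        # A second program would attach the same way, given the block's name.
        reader = shared_memory.SharedMemory(name=writer.name)
        try:
            # Slice explicitly: the block may be larger than requested.
            return bytes(reader.buf[:len(message)])
        finally:
            reader.close()
    finally:
        writer.close()
        writer.unlink()  # release the block once no one needs it


print(roundtrip(b"hello"))  # b'hello'
```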

trending keywords on this topic / related keywords / trending hashtags

advanced architecture and parallel processing

parallel computer methods

the state of computing

multiprocessor and multi computers

multi vector and simd computers

pram and vlsi models

architectural development tracks

program and network properties

condition of parallelism

program partitioning and scheduling

program flow mechanism

system interconnect architecture

principles of scalable performance

performance metrics and measures

parallel processing applications

speedup performance laws

scalability analysis and approaches

processors and memory hierarchy

advanced processor technology

super scalar and vector processors

memory hierarchy technology

virtual memory technology

bus, cache and shared memory

bus system

cache memory organizations

shared memory organization

sequential and weak consistency models

pipelining and super scalar techniques

linear pipeline processors

non linear pipeline processors

instruction pipeline design

arithmetic pipeline design

parallel and scalable architectures

multiprocessor system interconnect

cache coherence and synchronization mechanism

three generations of multi computers

message passing mechanism

vector processing principles

simd computer organization

principles of multi threading

fine grain multi computers

parallel programming

parallel programming models

parallel language and compilers

dependency analysis

code optimization and scheduling

loop parallelization

mpi and pvm libraries

instruction level parallelism

design issue

models of typical processor

compiler directed instruction level parallelism

operand forwarding

Tomasulo's algorithm

branch prediction

thread level parallelism